Mixed Reality Capture Studios: A Look Inside

360-degree video playing on a head-mounted display is not really virtual reality, despite widespread public confusion. Video cameras capture content with a locked-in perspective: you can’t look around an object, and 360 video is no exception.

For virtual reality to feel like reality, you need to be able to change your viewing perspective, and that is not possible with conventional video alone. That’s why game engines are used to create virtual reality scenes. They’re great at creating fully 3D environments that you can walk around in, just like in real life.

There’s a downside to using a game engine instead of video, however, and that is realism. That’s not to say game engines haven’t come an extremely long way in the graphical fidelity they can produce; they look stellar! But there are still situations where you want to capture real life in 3D exactly as it looks. In other words, you want volumetric video.

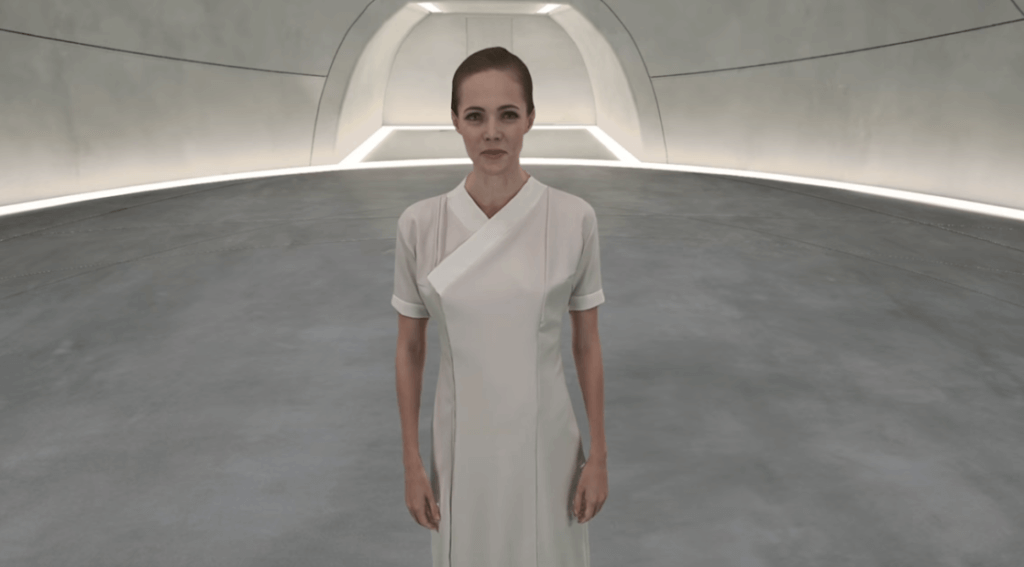

Microsoft has come up with a way to capture real human performances in 3D space, ready to use in holograms and virtual reality. It’s calling its volumetric capture solution the Microsoft Mixed Reality Capture Studio.

There are four studios open at the moment: one each in Redmond, San Francisco, London and Los Angeles. Third parties can book time at any of these locations to capture holograms for use in a HoloLens application, in virtual reality, or even in an ordinary 2D interactive experience.

The result of one of these capture sessions looks like video from any given viewpoint, but since the capture exists in 3D space, you can completely change the viewpoint afterward, exactly as you would with a 3D model. The captures are delivered as raw .obj/.png files, with detail in the range of 40k triangles per character.
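To get a feel for what a figure like 40k triangles means in practice, here is a minimal sketch that counts vertices and triangles in .obj data. It assumes the standard Wavefront OBJ text layout (`v` lines for vertices, `f` lines for faces); the function name and the tiny sample mesh are made up for illustration and are not taken from a real capture.

```python
def count_obj_triangles(lines):
    """Count vertices and triangles in Wavefront OBJ text lines.

    Hypothetical helper for illustration only.
    """
    vertices = 0
    triangles = 0
    for line in lines:
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        if parts[0] == "v":
            vertices += 1
        elif parts[0] == "f":
            # A face with n corners splits into n - 2 triangles.
            # parts holds the "f" keyword plus n corners, so n - 2 == len(parts) - 3.
            triangles += max(len(parts) - 3, 0)
    return vertices, triangles


# Made-up sample: one triangle and one quad sharing four vertices.
sample = """\
v 0 0 0
v 1 0 0
v 1 1 0
v 0 1 0
vt 0 0
f 1/1 2/1 3/1
f 1 2 3 4
""".splitlines()

vertices, triangles = count_obj_triangles(sample)
print(vertices, "vertices,", triangles, "triangles")
```

On a real capture you would pass the lines of one frame’s .obj file instead of the sample; the accompanying .png would hold the texture that is mapped onto those triangles.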

Mixed Reality Capture Studios seem to be another way Microsoft is paving the way into what we believe is the next generation of computing, along with their efforts in HoloLens and Azure. NASA even used this tech to put Buzz Aldrin on Mars in their HoloLens app “Destination: Mars.”

But with any cool new technology come imperfections, and volumetric capture stages are no exception. Microsoft’s system, much like the Kinect and HoloLens, is based on infrared depth sensors. This approach works really well when scanning a room with a HoloLens, but when you are trying to accurately determine the shape of something, many factors can affect an object’s ability to reflect the infrared light back at the sensors.

Infrared sensors can have problems with shiny materials, black materials, leathers, glass, and plastics. Sometimes two seemingly identical items, like two black T-shirts, can be made of different enough material that one captures successfully and the other doesn’t. This also applies to the make-up that would normally be used in film to prevent shine and hide imperfections: there are restrictions on what can be used.

This can lead to complications for the directors, production designers and stylists working on a Mixed Reality Capture set. At $20,000 a day, you don’t want to spend most of your time trying to figure out what works and what doesn’t.

But even with those limitations in mind, it’s easy to see the implications of such a technology for the future of filmmaking and interactive experiences.